With all the effort you’re putting in to increase website traffic, why would you want to ask search engines NOT to crawl your webpages?

Or at least this is what so many bloggers and webmasters think.

And this is the reason why so many of them never care to learn what Robots.txt files *really* are, let alone how to create one.

I would know… because I was one of them a couple of years back.

As for what Robots.txt means, it’s basically a “Do Not Enter” sign for the search engines.

It’s a plain text file, stored in the main (root) directory of the server, that tells the search spiders which webpages/links on your domain NOT TO CRAWL.

How does Robots.txt work?

Again, the concept is extremely simple. Search engines do not crawl every link they come across like outlaws.

Before crawling, the spiders ensure they have permission to do so.

So, when they are about to access a page, the first thing they look at is the Robots.txt file, to make sure they have permission to access and crawl the page.

In this file, you can command Google, Bing, and other bots to KEEP AWAY from a particular webpage or an entire section when crawling.
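To make the permission check concrete, here is a minimal sketch of what a well-behaved bot does before fetching a page. It uses Python’s standard `urllib.robotparser` module. The bot name `ExampleBot`, the `www.example.com` domain, and the `/private/` rule are made-up values for illustration only.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content (not taken from any real site).
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""

# Parse the rules from text instead of downloading them,
# so the example runs without network access.
parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# A polite bot asks "may I fetch this URL?" before every request.
blocked_url = "https://www.example.com/private/report.html"
open_url = "https://www.example.com/blog/post.html"

print(parser.can_fetch("ExampleBot", blocked_url))  # False
print(parser.can_fetch("ExampleBot", open_url))     # True
```

Keep in mind that this check is voluntary. Reputable search bots follow it, but nothing in the file technically stops a badly behaved bot.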

How to create a Robots.txt file?

A generic Robots.txt file looks like:

User-agent:*

disallow:/terms-and-conditions.html

Here ‘User-agent’ with the asterisk value (*) says the following command is for ALL the search bots.

If you DON’T want Google to crawl the page but are fine with Bing crawling it, use the User-agent ‘Googlebot’. If it’s the opposite (no to Bing and yes to Google), the User-agent value would be ‘Bingbot’.

Different search engines have different crawlers/bots. If you don’t want a specific search engine to crawl your page, you have to point that out to its bot by mentioning the bot’s name in the ‘User-agent’ space.
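Here is a small sketch of how a bot-specific rule plays out, again using Python’s standard `urllib.robotparser`. The `www.example.com` domain is a placeholder, and the rule blocks only Googlebot from the terms and conditions page.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: only Googlebot is told to stay away from this page.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /terms-and-conditions.html
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

url = "https://www.example.com/terms-and-conditions.html"

# The rule group names Googlebot, so only Googlebot is blocked.
googlebot_allowed = parser.can_fetch("Googlebot", url)
# No group mentions Bingbot (and there is no "*" group), so it may crawl.
bingbot_allowed = parser.can_fetch("Bingbot", url)

print(googlebot_allowed)  # False
print(bingbot_allowed)    # True
```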

For now, let’s stick to (*), which commands ALL search engines.

The ‘disallow’ part is the actual command that asks the bots to NOT crawl the ‘Terms and Conditions’ page on your domain.

You can include multiple ‘disallow’ lines.

You can prevent crawling by either blocking one particular webpage (like above) OR any specific category/label, like…

User-agent:*

disallow:/category1

disallow:/category2

There’s also an ‘Allow’ directive, btw.

Say, if you want to make a category un-crawlable but there’s one specific page in that category that you would like search engines to crawl, you can use the ‘Allow’ directive here to make that possible.

The Robots.txt file will look something like…

User-agent:*

disallow:/category1

allow:/category1/product1

You can use the Robots.txt file flexibly, as and when you require, to make your pages un-crawlable.
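How does a bot decide between a ‘disallow’ and an ‘allow’ that both match the same URL? Under the Robots Exclusion Protocol standard (RFC 9309), which Google follows, the most specific (longest) matching rule wins, and ‘allow’ wins a tie. The sketch below is a simplified illustration of that idea, not a full parser: it handles plain path prefixes only, with no `*` or `$` wildcards, and `is_allowed` is a name made up for this example. It is hand-rolled because Python’s built-in `urllib.robotparser` applies rules in file order instead.

```python
def is_allowed(rules: list[tuple[str, str]], path: str) -> bool:
    """Return True if `path` may be crawled under `rules`.

    `rules` holds (directive, path_prefix) pairs for one user-agent group.
    The longest matching prefix wins; on a tie, 'allow' wins.
    """
    best_length = -1
    allowed = True  # no matching rule means the path is crawlable
    for directive, prefix in rules:
        # Skip empty rules and rules that do not match this path.
        if not prefix or not path.startswith(prefix):
            continue
        is_allow = directive.lower() == "allow"
        longer = len(prefix) > best_length
        tie_favoring_allow = len(prefix) == best_length and is_allow
        if longer or tie_favoring_allow:
            best_length = len(prefix)
            allowed = is_allow
    return allowed


# The rules from the example above.
RULES = [("disallow", "/category1"), ("allow", "/category1/product1")]

print(is_allowed(RULES, "/category1/product1"))  # True
print(is_allowed(RULES, "/category1/product2"))  # False
print(is_allowed(RULES, "/about"))               # True
```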

Including sitemap in Robots.txt file

It’s also a nice practice to include your sitemap in the robots.txt file.

Keeping the technical part aside, think about it…

Before crawling a website, the search bots read the Robots.txt file first (provided the file is located in the main directory) to see whether they have permission to crawl or not.

If they find the XML sitemap, which is a central list of all the URLs on your website, in the very first file they read on your domain, would it not facilitate quick and easy crawling?

Of course, it would help tremendously.

So, it makes more sense to have your Robots.txt and sitemap.xml hand-in-hand. Tell the search bots what not to crawl and then what to index.

Now, including the sitemap in robots.txt is fairly straightforward. You simply add another line to your existing file:

Sitemap: http://www.yourwebsitename.com/sitemap.xml

Change the above link if the URL of your sitemap varies.

After including the XML sitemap, here’s how robots.txt should look in its most basic form:

User-agent:*

disallow:/category1

Sitemap: http://www.yourwebsitename.com/sitemap.xml

If you have multiple sitemaps, add multiple links. Like:

User-agent:*

disallow:/category1

Sitemap: http://www.yourwebsitename.com/sitemap.xml

Sitemap: http://www.yourwebsitename.com/sitemap2.xml
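If you want to double-check which sitemaps a robots.txt file actually declares, here is a short sketch using the `site_maps()` method of Python’s standard `urllib.robotparser` (available from Python 3.8). The domain and the two sitemap file names are placeholders chosen for this example.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt with one rule group and two sitemap lines.
ROBOTS_TXT = """\
User-agent: *
Disallow: /category1

Sitemap: https://www.example.com/sitemap-posts.xml
Sitemap: https://www.example.com/sitemap-pages.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# site_maps() returns None when no Sitemap line exists,
# so fall back to an empty list.
sitemaps = parser.site_maps() or []

for sitemap_url in sitemaps:
    print(sitemap_url)
```

Sitemap lines are not tied to any ‘User-agent’ group, which is why they can sit anywhere in the file.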

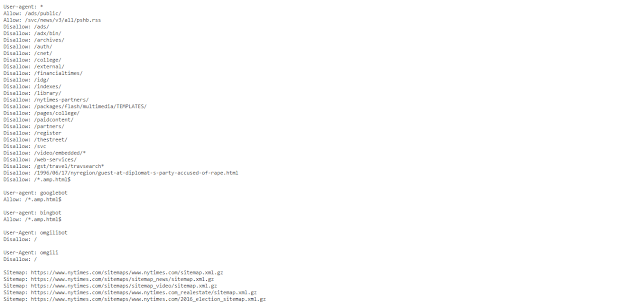

Here’s how the Robots.txt file of the New York Times looks like:

Everything sounds good till now?

GREAT!

Now before moving ahead, it’s also important that we draw a proper distinction between Robots.txt and the Noindex meta tag.

Because many people confuse robots.txt with the noindex directive.

Robots.txt vs. Noindex

If you notice, in the above part all I talk about is ‘crawl’ and NOT ‘index’. And this is where the biggest difference between Robots.txt and the Noindex meta tag lies.

When you use the Robots.txt file, the search engine DOES NOT crawl that particular webpage. What it can still do is index the URL.

Now imagine: you publish a new article and include in it a link to a particular webpage that you originally wanted to be un-crawlable.

When the spider crawls this published article (say, after you inspect it in Search Console and request indexing), it discovers the link to the ‘un-crawlable’ URL. Robots.txt stops the spider from fetching that page, but it does not stop the search engine from knowing that the URL exists.

And a URL that the search engine knows about can still be indexed, even though its content was never crawled.

And if indexed, there’s a chance that it will show up on the SERP, typically as a bare URL with little or no description.

Another hypothetical situation is if someone links to this particular ‘un-crawlable webpage’ from their own site. The search bots find the URL through that link, and robots.txt can’t keep it out of the index.

Meaning, just because you close one doorway by asking search bots not to crawl a page doesn’t necessarily mean its URL won’t reach the index through another entryway.

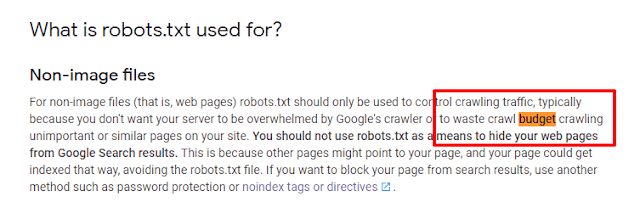

This is why even Google says, “You should not use robots.txt as a means to hide your web pages from Google Search results.”

Because Robots.txt doesn’t hide your page from search engines, the page might still get indexed and show up on the SERP.

This is the flaw that the Noindex meta tag fixes. It does exactly what its name says.

The Noindex tag ensures the search bots DO NOT index your page. It ensures the search engines DO NOT list that page on their result pages.

A noindex meta tag looks something like this:

<meta name="robots" content="noindex">

Here, ‘robots’ means ALL search engine crawlers.

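Again, if you want one search engine to index the page and another not to, replace ‘robots’ with the name of the specific crawler that you want to keep from indexing the page (‘googlebot’, for example).

Content ‘noindex’ is the basic directive that tells the crawlers they are not allowed to index the particular webpage. Keep in mind that a crawler has to be able to fetch the page to see this tag, so a page carrying noindex must not also be blocked in Robots.txt.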
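To see how a crawler might read this tag, here is a rough sketch that scans a page’s HTML for a noindex directive using Python’s standard `html.parser` module. The names `RobotsMetaParser` and `is_noindex` are invented for this example, and real crawlers handle more cases (such as HTTP headers) than this does.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Looks for a noindex directive in <meta> tags with the given names."""

    def __init__(self, names: set[str]) -> None:
        super().__init__()
        self.names = {name.lower() for name in names}
        self.noindex = False

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        # Attribute values can be None (e.g. <meta name>), so default to "".
        attr = {key: (value or "") for key, value in attrs}
        if attr.get("name", "").strip().lower() not in self.names:
            return
        # The content attribute may hold several comma-separated directives.
        directives = {part.strip().lower() for part in attr.get("content", "").split(",")}
        if "noindex" in directives:
            self.noindex = True


def is_noindex(html: str, crawler: str = "robots") -> bool:
    """True if the page tells all crawlers, or `crawler` specifically, not to index it."""
    parser = RobotsMetaParser({"robots", crawler})
    parser.feed(html)
    return parser.noindex


page_for_all = '<html><head><meta name="robots" content="noindex"></head></html>'
page_for_google = '<html><head><meta name="googlebot" content="noindex, nofollow"></head></html>'

print(is_noindex(page_for_all))                  # True
print(is_noindex(page_for_google, "googlebot"))  # True
print(is_noindex(page_for_google, "bingbot"))    # False
```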

Still with me?

It’s okay if none of this is making any sense. Bookmark this post and give the section a re-read sometime later. Continue with the rest.

Why is Robots.txt important?

It’s another very common question that people ask.

After all, isn’t it a good thing if the search bots crawl the page? Would it not help the search ranking?

The simple answer is NO.

The reason why you should use the Robots.txt file is that you don’t want search bots to crawl webpages that aren’t important.

Many pages on your website are irrelevant to searchers and exist for back-end purposes.

They aren’t exactly meant for visitors to land on. The thank-you page, internal lead generation pages and so many more…

Using this exclusion protocol for each of them is a fitting idea here.

Other benefits of Robots.txt include:

- To keep search bots away from duplicate content on the website

- To inform search bots about the location of the XML Sitemap

But aside from these minor benefits, there’s a very direct SEO advantage of using the Robots.txt file.

Are You Wasting Crawl Budget?

Here’s how Google explains what the Robots.txt file is used for…

The key line is: “(Not) waste crawl budget crawling unimportant or similar pages on your site.”

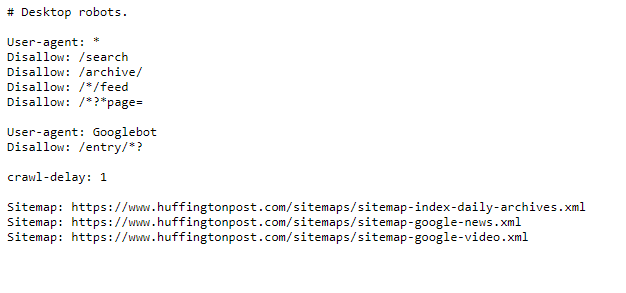

Here’s the thing: big websites like Huffington Post publish hundreds of articles every day. And they have been around for years now. Meaning, they are sitting on a gigantic pile of content.

Sadly, by and large, the old articles aren’t of much use to them because they focus on time-sensitive topics.

So, when search bots crawl their domain, do you think they crawl all the thousands of old posts? Or for that matter, do you think they prioritize the latest posts and the old posts equally?

The answer is: NO.

As is evident in Google’s explanation above, each website gets a “crawl budget”.

Now, presumably, the size of this budget depends on a range of factors, like backlinks, domain authority, PageRank, brand mentions, and more.

Meaning, large websites like HuffPost likely get a larger crawl budget than newer, less-renowned websites.

However, everyone gets a (limited) budget nonetheless.

Now imagine you’re spending this crawl budget on old, irrelevant, unnecessary URLs. Wouldn’t that be a waste?

Of course, it would!

This is where Robots.txt for SEO comes in. It makes URLs un-crawlable, and so saves the crawl budget.

As for our example of the Huffington Post, they certainly wouldn’t make their old posts un-crawlable.

However, to counter the challenge of duplicate content, which is a big source of crawl budget wastage, they have included archive, search, feed, and referral URLs in the Robots.txt file.

Similarly, if you have multiple categories/labels, an internal search option, a long archive, and other irrelevant URLs on your domain—it’s a great idea to lock them in the Robots.txt file.

This basic step can ensure your crawl budget is preserved and used well.

And this is one of the core and emerging reasons why you MUST use the Robots.txt file very carefully and smartly to positively affect the search ranking of your website.

Quick (And Basic) Questions

Where should Robots.txt be located?

It must be located at the root of the web host, i.e. in the main directory: www.yourwebsitename.com/robots.txt

…And NOT in a subdirectory like www.yourwebsitename.com/category/robots.txt.
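Since the file always lives at the root, you can work out its address from any page URL on the same host. A tiny sketch with Python’s standard `urllib.parse` follows. The function name `robots_txt_url` and the example domain are made up for illustration.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_txt_url(page_url: str) -> str:
    """Return the root-level robots.txt URL for the host serving `page_url`."""
    parts = urlsplit(page_url)
    # Keep the scheme and host; drop the page's own path, query and fragment.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


print(robots_txt_url("https://www.example.com/category/page.html?ref=home"))
# https://www.example.com/robots.txt
```

Note that each host gets its own file, so a subdomain such as blog.example.com would need a separate robots.txt at its own root.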

How do I find my Robots.txt file?

Simple. Type: www.yourdomainname.com/robots.txt. If you don’t find the file, simply use the tool at sitechecker.pro. If the file exists, the tool will come up with the right URL.

How to create a Robots.txt file for SEO?

You can use a plain text editor like Notepad to create the file. Decide what to allow and disallow, write the directives as shown above, and save the file as ‘robots.txt’. Then upload it to the main directory of the host. Avoid word processors such as MS Word, since they can add formatting that crawlers can’t read. Instead of writing the directives yourself, you can also use a Robots.txt generator to create the file and then make changes to the result.

Here’s a good Robots.txt generator.

Copy the result into Notepad, save it as ‘robots.txt’, and upload it to the root of the host.
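If you’d rather script it than type it, here is a minimal sketch of a do-it-yourself generator in Python. The function names `build_robots_txt` and `write_robots_txt`, and the paths and sitemap URL passed in, are illustrative assumptions. Uploading the file to your host is still up to you.

```python
from pathlib import Path


def build_robots_txt(disallow, allow=(), sitemaps=(), user_agent="*") -> str:
    """Assemble robots.txt text for a single user-agent group."""
    lines = [f"User-agent: {user_agent}"]
    lines += [f"Disallow: {path}" for path in disallow]
    lines += [f"Allow: {path}" for path in allow]
    if sitemaps:
        lines.append("")  # blank line between the rule group and sitemaps
        lines += [f"Sitemap: {url}" for url in sitemaps]
    return "\n".join(lines) + "\n"


def write_robots_txt(content: str, directory: str = ".") -> Path:
    """Save the text as a plain UTF-8 file named robots.txt."""
    target = Path(directory) / "robots.txt"
    target.write_text(content, encoding="utf-8")
    return target


# Hypothetical paths and sitemap URL, for illustration only.
content = build_robots_txt(
    disallow=["/category1"],
    allow=["/category1/product1"],
    sitemaps=["https://www.example.com/sitemap.xml"],
)
print(content)
```

Calling `write_robots_txt(content)` would then save the file in the current folder, ready to upload to the root of the host.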

Conclusion

Now you know all the what’s, how’s and why’s — time for some action!

Check the Robots.txt file on your domain. See what URLs are made un-crawlable. And then decide which new ones need to be locked in there.

If you don’t already have one, create it. It’s extremely simple. And then smartly use it to boost your SEO.

This is part of a 9-post series on Ignored SEO Techniques. Click below and read more:

- Is Low Click-Through Rate Destroying Your SEO Strategy?

- What Is The Perfect Keyword Density Percentage?

- Your Font Is Hurting Your Website Ranking (Fix It!)

- Revamp, Repurpose Your Old Content to Boost SEO

- Do Outbound Links Help SEO?

- Level-up Your On-Page SEO Game With Whitespace

- How To Use Interactive Contents to Increase SEO Ranking?

- Website Speed in SEO: Does Your Site Load in 2 Seconds?